Projects

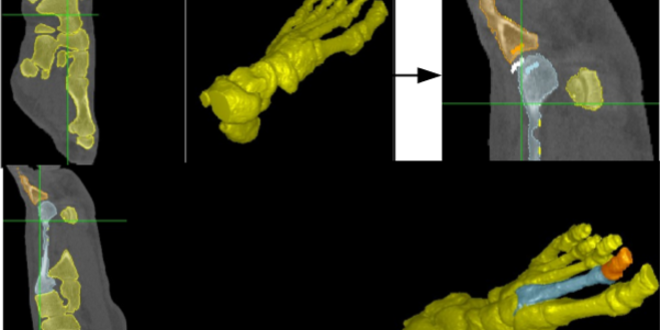

Interactive Object Shape Modeling in Medical Image Segmentation

Model-based image segmentation is an important and active field of research in medical imaging. Existing approaches rely on constructing static mathematical representations of an object of interest, learning the object’s shape from a data set of previously segmented instances of the object in training images. Those data sets are frequently produced with manual rather than interactive tools and very often contain errors, because annotation is a tedious process whose importance as an initial step the literature usually overlooks. These issues limit the capacity to create large annotated data sets that would allow robust computation of object shape models. We are interested in developing dynamic object shape models that aid the annotation process: the model learns from the user’s corrections of previously segmented images to facilitate the annotation of new, unsegmented training images.

Team:

Alexandre Xavier Falcão

Jayaram K. Udupa

Krzysztof Chris Ciesielski

Paulo A. V. de Miranda

Thiago Vallin Spina